I am not going to talk about Type I and II errors and stuff like that, which you can get from any ol’ research methods book. These are hard-won insights, lessons that have been hammered into my head over time. They come from hundreds of interviews and observations, and from watching and talking with people. These are the mistakes that can keep you from successfully “getting inside their heads.”

Mistake 1: Not thinking hard enough about sampling. This is the most important thing. Especially if you have a number of “researchers” contributing. You gotta think about who you are going to talk to or watch.

It is possible to get good results even if you have inexperienced interviewers. It is possible to get good results even if you have the wrong questions. But it is almost impossible to get good results if you aren’t asking the right people.

For good design research you gotta be talking to or watching the right people. Mess this up and, no matter how well you do with the rest of your plan, tasks, and design, you might as well be making up your data. Who knows, your made-up data is probably better!

Mistake 2: Not doing any Pre-thinking. If you don’t have a clue about the decisions your decision-makers have to make, then you aren’t giving them as much value as you could be.

Spend some time talking with your clients, stakeholders, the people who are going to be taking your research and using it. What are the decisions they are going to be making that you might be able to help collect data on? If you have in mind the decisions down the road, it is much easier to set up your tables, your hypotheses, and your null hypothesis beforehand, so you can use the time as a naturalistic experiment that helps them make their decision. Doing this after the fact is ten times harder than setting it up ahead of time, and often impossible because of time constraints.

If you can answer one or two or even god forbid three questions that stick in their craw you are rocking and rolling. Pre-thinking your decisions leads to pre-thinking your analysis, leads to pre-thinking your data collection. Pre-thinking is Good.
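One way to make the pre-thinking concrete is to write the plan down as data before you go into the field. The sketch below is only an illustration: the `PlannedQuestion` class, the example decision, and the row and column labels are all hypothetical, not part of any particular method. It ties one client decision to a hypothesis, a null hypothesis, and an empty table that your observations fill in later.

```python
from dataclasses import dataclass, field


@dataclass
class PlannedQuestion:
    """One client decision, pre-thought down to the table you will fill in."""
    decision: str          # the decision the client has to make
    hypothesis: str        # what you expect to see
    null_hypothesis: str   # what "no effect" would look like
    rows: list[str]        # categories for the table rows
    cols: list[str]        # categories for the table columns
    table: dict = field(init=False)

    def __post_init__(self):
        # Build the empty table up front, one zeroed cell per row/column pair.
        self.table = {(r, c): 0 for r in self.rows for c in self.cols}

    def record(self, row: str, col: str) -> None:
        """Tally one observation into a cell that was planned ahead of time."""
        if (row, col) not in self.table:
            raise KeyError(f"({row!r}, {col!r}) is not in the planned table")
        self.table[(row, col)] += 1


# Hypothetical example: every label below is made up for illustration.
plan = PlannedQuestion(
    decision="Should we add a second checkout counter?",
    hypothesis="Shoppers who wait longer walk out more often",
    null_hypothesis="Walking out is unrelated to how long shoppers wait",
    rows=["long_wait", "short_wait"],
    cols=["walked_out", "purchased"],
)

plan.record("long_wait", "walked_out")
plan.record("long_wait", "walked_out")
plan.record("short_wait", "purchased")
```

Because the cells exist before the first interview, an observation that does not fit the plan fails loudly instead of quietly becoming a new category after the fact.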

Mistake 3: Trying to be unbiased. Don’t try to be a blank slate. It is a waste of time. I say “Don’t think about pink elephants,” and what jumps right into your mind’s eye? Yep, you got it: those darn pink elephants. Instead, work with what you know already.

Even if you aren’t a professional generalist you know something. Or at least you think you know something. Spell it out. Write it down. This is your initial bias.

A good anthropologist is not devoid of bias. A common misperception is that a good anthropologist is someone who is devoid of bias, someone who has purged their system, their brain, their thinking of bias. WRONG! A good anthropologist is AWARE of their biases and takes them into account. It isn’t some relativist psycho-babble.

Keep a diary AND field notes. Use your bias. Become aware of things that excite you or piss you off. I always say you know you are finding good stuff if strong emotions are involved. Try to understand that YOU are the instrument. You don’t have to go native or become like the people you study. You are you; you aren’t trying to become them. You are trying to understand them. Use your built-in radar of emotion to your advantage.

Mistake 4: Not connecting the Pictures Together. Many times we focus so much on the micro level of the customer that we don’t understand the client’s macro-view.

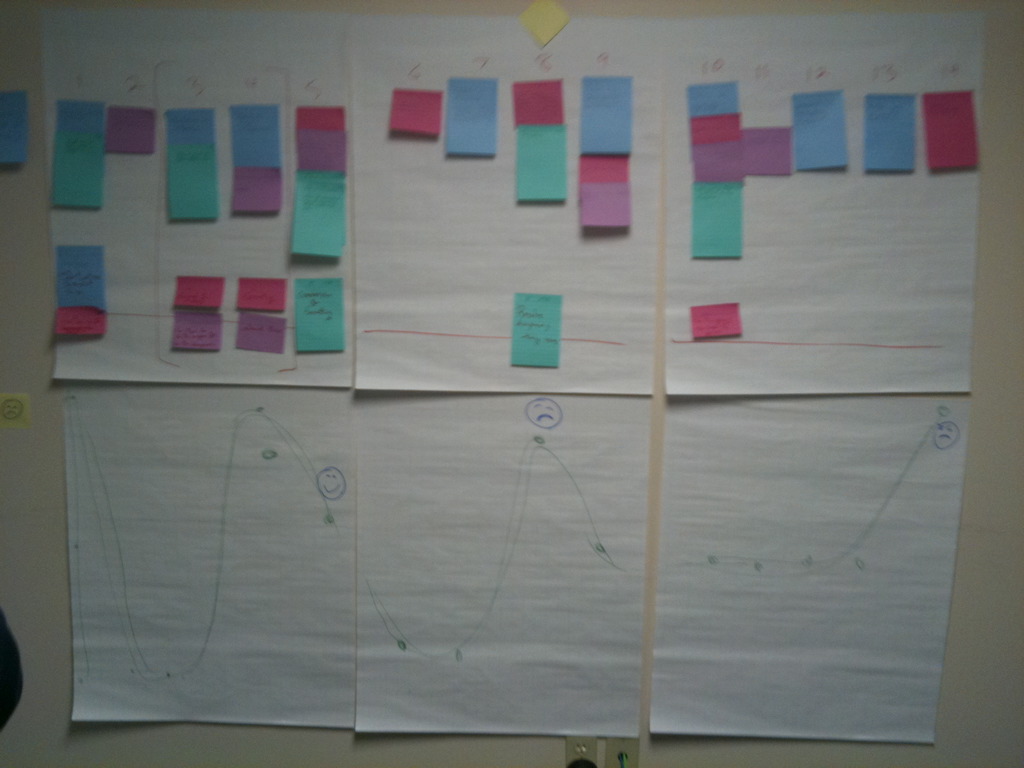

Document the macro-system. What is it you are seeking to manage or predict? Seek to understand the system. What are you making or saving? How does the system work? What makes it expand or contract? What makes it go up or go down?

Build a functional model of the business side of things. Build a process model of the micro-customer side of things. Show the connections between the two. The amount of insight you can generate for the business owners is immense, and just doing this is sometimes worth the entire cost of the project. This helps make your work easier to understand because you start from your client’s current understanding and build upon that.

You don’t want to dunk them into the cold water of a different world-view with nothing for them to hold onto. You need to ease them “inside the head” of the customer. The cold hard fact is that they usually don’t care about the customer experience except for how it impinges in a functional sense on the business. So make it clear, make it easy, and connect the dots. It might not be the warm and fuzzies, but it will get clients very excited about the lives and world-views of their customers.

Mistake 5: Having More than Five Variables. Ok, so here is an easy one. Focus in on 5 conceptual variables. Not less than 3. Not more than 5.

Usually we think that the more variables we are looking at, the more complicated the system, and of course we want to convey the complexities of a customer’s world. Unless you are doing extended fieldwork measured in months and years, stick to my advice.

Try to determine 5 different variables at the beginning of your work. Code your data with those 5 variables. These should drive down to operational definitions. Don’t worry about scaling them or Likert-izing them. If you can show how one thing influences another thing, and whether it is a positive or negative influence, you are a good way toward building a powerful model of what your client wants to change.

At least one of these variables has to be the thing that the client or customer wants to change, influence, manage, or predict. The other variables can be from the perspective of the informants. But the operational definition better be understandable to the client. Make sure to use these variables in the Big Pictures you build and it will all start to weave together into a coherent story.
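To show what coding data against a handful of variables can look like, here is a minimal sketch that tallies signed influences between variables. Everything in it is an assumption for illustration: the variable names, the observations, and the helper functions `build_influence_model` and `influences_on` are made up, and `repeat_purchases` stands in for the one variable the client wants to change. There are no scales here, only direction, as described above.

```python
from collections import defaultdict

# Hypothetical variables; "repeat_purchases" plays the role of the
# variable the client wants to change.
VARIABLES = [
    "repeat_purchases",
    "trust_in_staff",
    "wait_time",
    "price_confusion",
    "word_of_mouth",
]

# Each coded observation is (cause, effect, sign): +1 means the informant
# described a positive influence, -1 a negative one. All rows are made up.
coded_observations = [
    ("trust_in_staff", "repeat_purchases", +1),
    ("wait_time", "trust_in_staff", -1),
    ("wait_time", "repeat_purchases", -1),
    ("price_confusion", "trust_in_staff", -1),
    ("trust_in_staff", "word_of_mouth", +1),
    ("trust_in_staff", "repeat_purchases", +1),
]


def build_influence_model(observations, variables):
    """Tally positive and negative mentions for each cause -> effect pair."""
    if not 3 <= len(variables) <= 5:
        raise ValueError("stick to between 3 and 5 conceptual variables")
    tallies = defaultdict(lambda: {"+": 0, "-": 0})
    for cause, effect, sign in observations:
        if cause not in variables or effect not in variables:
            raise ValueError(f"unplanned variable in ({cause!r}, {effect!r})")
        if sign not in (1, -1):
            raise ValueError("sign must be +1 or -1")
        tallies[(cause, effect)]["+" if sign > 0 else "-"] += 1
    return dict(tallies)


def influences_on(model, target):
    """List (cause, direction, mentions) for everything that affects target."""
    result = []
    for (cause, effect), counts in model.items():
        if effect != target:
            continue
        net = counts["+"] - counts["-"]
        direction = "+" if net > 0 else "-" if net < 0 else "mixed"
        result.append((cause, direction, counts["+"] + counts["-"]))
    return sorted(result)


model = build_influence_model(coded_observations, VARIABLES)
for cause, direction, mentions in influences_on(model, "repeat_purchases"):
    print(f"{cause} -> repeat_purchases: {direction} ({mentions} mentions)")
```

The check on the number of variables is deliberate: a sixth variable raises an error, which forces the conversation about which five matter most.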

To conclude, these are some of the things I have learned over my almost 20 years of investigations. And it has taken me a long time to master them. They are much more than methods; they are skills, and only through experience do you have any chance of getting better at them. But improvement is a crapshoot if you don’t reflect on them. I hope that if you haven’t thought about these before, you will on your next project, and that you will start to hone your skills and get better at design research. You shouldn’t be satisfied with just knowing a method. You should hone your skills, better yourself, better your research, and better your results, and in turn better the design that comes out and the lives of the people the design affects. These are not methods or tricks; they are lessons in how to reflect on your design research and build your skills. Once you understand and apply these to any design research problem you are investigating, uncovering customer insights becomes much easier.